Why Artificial Intelligence Needs Friction to Think Clearly

(Hint: We all do.)

The most reliable way to improve thinking—human or artificial—is not to make it smoother.

It is to change the constraints under which it operates.

This runs counter to most modern AI design intuition. We tend to assume that better intelligence emerges from cleaner pipelines, unified representations, and frictionless execution. Remove obstacles. Minimize delay. Let everything talk to everything at full bandwidth.

But smooth systems do not think better.

They think faster—and flatter.

What they lack is internal resistance.

Thought Improves When It Is Forced to Change Shape

In human cognition, insight rarely arrives in a single pass. It emerges when an idea must survive translation:

- from intuition to language

- from rough notion to formal reasoning

- from confidence to justification

- from one perspective to another that does not share its assumptions

Each translation is an opportunity for failure.

Weak ideas do not survive this process. Strong ones adapt.

This is why constraint improves thinking. It forces ideas to show their work.

When you deliberately shift tone, role, or perspective—editor, reviewer, skeptic, observer—you are not adding noise. You are creating internal edge zones where ideas must cross incompatible rulesets to persist.

That crossing is where reasoning sharpens.

Personas Are Not Fragmentation — They Are Review Passes

Using multiple personas is not about identity. It is about forcing interpretive constraints.

Each persona limits:

- what can be claimed

- how confidently it can be said

- what kinds of evidence are acceptable

- which failures are visible

An idea that sounds convincing in one voice may collapse entirely in another. That collapse is not a failure—it is a test.
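One way to make the idea of a persona as a review pass concrete is to treat each persona as a small set of limits that a claim is checked against. The sketch below is illustrative only: `Claim`, `Persona`, `review_passes`, the confidence ceilings, and the evidence labels are all assumptions invented for this example, not part of any existing system.

```python
from __future__ import annotations
from dataclasses import dataclass


@dataclass(frozen=True)
class Claim:
    text: str
    confidence: float            # stated confidence, 0.0 to 1.0
    evidence: frozenset[str]     # kinds of evidence offered (hypothetical labels)


@dataclass(frozen=True)
class Persona:
    name: str
    max_confidence: float              # how confidently a claim may be stated
    accepted_evidence: frozenset[str]  # which kinds of evidence count

    def review(self, claim: Claim) -> list[str]:
        """Return objections; an empty list means the claim survives this voice."""
        objections = []
        if claim.confidence > self.max_confidence:
            objections.append(f"{self.name}: stated too confidently")
        if not (claim.evidence & self.accepted_evidence):
            objections.append(f"{self.name}: no acceptable evidence")
        return objections


def review_passes(claim: Claim, personas: list[Persona]) -> dict[str, list[str]]:
    """Run the same claim past every persona and collect each one's objections."""
    return {p.name: p.review(claim) for p in personas}


# Hypothetical personas: the editor is permissive, the skeptic is not.
editor = Persona("editor", 1.0, frozenset({"anecdote", "citation", "experiment"}))
skeptic = Persona("skeptic", 0.7, frozenset({"citation", "experiment"}))

claim = Claim("Constraint improves output.", 0.9, frozenset({"anecdote"}))
results = review_passes(claim, [editor, skeptic])
```

Here the same claim passes the editor untouched and draws two objections from the skeptic. Nothing about the claim changed; only the ruleset it had to cross.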

This kind of test is exactly what current AI systems are missing.

Most large models speak from a single, continuous authority. There is no internal disagreement severe enough to interrupt a fragile thought. No role change that forces reinterpretation. No regime shift that asks, “Does this still hold if I slow you down, narrow your bandwidth, or raise the precision bar?”

As a result, hallucinations propagate smoothly.

Hallucination Is a Failure of Constraint, Not Knowledge

AI hallucinations are often framed as data or scale problems. They are neither.

They are architectural failures caused by systems being allowed to speak past the edge of what they actually know.

When a system is never forced to:

- downgrade confidence

- re-encode an idea in a different form

- justify itself to a slower, stricter process

- encounter structured disagreement

then approximation masquerades as truth.

Confidence becomes cheap.

In human terms, this is what happens when someone is never questioned—not because they are always right, but because no one has the authority or mechanism to slow them down.

Edge Thinking as a Review Engine

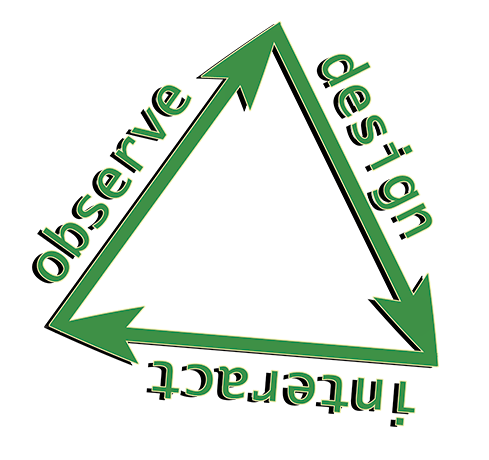

Edge Thinking reframes intelligence as a process of controlled self-opposition.

Ideas should not be optimized for speed to output.

They should be optimized for survival across transitions.

In practical AI terms, this means systems that:

- generate ideas in low-precision, associative regimes

- force those ideas through compression and summarization

- re-evaluate them under higher precision and stricter logic

- expose them to role-based critique (verification, skepticism, consequence analysis)

- discard ideas that cannot survive reinterpretation

This is not redundancy. It is selection pressure.

Creativity increases because exploration is cheap at the edge.

Accuracy increases because commitment is expensive at the center.
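The transitions listed above can be sketched as a chain of stages, each of which either passes an idea along (possibly re-encoded) or discards it. This is a toy under stated assumptions: `run_transitions` and the three stage functions are hypothetical, and the keyword rules inside them are crude stand-ins for real summarization, logic checking, and critique.

```python
from typing import Callable, Optional

# A stage returns the (possibly re-encoded) idea, or None to discard it.
Stage = Callable[[str], Optional[str]]


def run_transitions(
    idea: str, stages: list[tuple[str, Stage]]
) -> tuple[Optional[str], list[str]]:
    """Push one idea through every stage in order.

    Returns the surviving idea (or None) and the names of the stages it passed.
    """
    passed: list[str] = []
    current = idea
    for name, stage in stages:
        result = stage(current)
        if result is None:
            return None, passed
        current = result
        passed.append(name)
    return current, passed


def compress(idea: str) -> Optional[str]:
    """Summarization stand-in: keep the first sentence; reject if nothing remains."""
    summary = idea.split(".")[0].strip()
    return summary or None


def strict_logic(idea: str) -> Optional[str]:
    """Higher-precision stand-in: reject unqualified absolutes."""
    absolutes = {"always", "never", "guaranteed"}
    return None if set(idea.lower().split()) & absolutes else idea


def skeptic(idea: str) -> Optional[str]:
    """Role-based critique stand-in: demand a stated reason."""
    return idea if "because" in idea.lower() else None


STAGES = [("compress", compress), ("strict_logic", strict_logic), ("skeptic", skeptic)]

# Generation is the cheap part: candidates are accepted without filtering.
candidates = [
    "Caching always helps. Trust me.",
    "Caching helps here because reads dominate writes. Details follow.",
    "Maybe caching.",
]
outcomes = [run_transitions(c, STAGES) for c in candidates]
survivors = [idea for idea, _ in outcomes if idea is not None]
```

Of the three candidates, only the one that compresses cleanly, avoids an absolute, and states a reason reaches the end. The other two are dropped at the strict-logic and skeptic stages respectively.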

Why Constraint Increases Creativity

This is the counterintuitive part.

Constraint does not reduce creative output—it increases it.

When ideas must pass through multiple filters, creators are incentivized to:

- explore more broadly early

- accept failure quickly

- refine concepts iteratively

- abandon brittle notions without ego

The system becomes more playful and more disciplined.

This is true in writing, engineering, design—and intelligence itself.

Unconstrained systems produce confident mediocrity.

Constrained systems explore more ideas but commit to fewer, and the ones they commit to are better.

Monitoring Is the Missing Layer

The most important function in human intelligence is not generation. It is self-observation.

We notice when we are confused.

We sense when an answer feels wrong.

We slow down when stakes rise.

Current AI systems largely lack this supervisory capacity. They generate, but they do not watch themselves generate.

Edge-based architectures create natural monitoring signals:

- disagreement between subsystems

- collapse under higher precision

- failure to compress meaningfully

- energy cost spikes without accuracy gain

These are not errors to suppress. They are signals to learn from.

Intelligence improves when a system learns which internal pathways produce reliable outcomes and which produce noise.
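As a rough illustration, the four monitoring signals named above can be read off a record of a single reasoning attempt. Everything here is an assumption made for the sketch: the `Trace` fields, the `monitoring_signals` function, and the threshold defaults are hypothetical and would need to be defined and tuned for any real system.

```python
from __future__ import annotations
from dataclasses import dataclass


@dataclass
class Trace:
    """Hypothetical record of one reasoning attempt."""
    answers: list[str]        # outputs from independent subsystems
    passed_strict: bool       # survived the higher-precision re-check
    compression_ratio: float  # summary length / original length
    cost: float               # compute or energy units spent (arbitrary scale)
    accuracy_gain: float      # measured improvement bought by that cost


def monitoring_signals(
    t: Trace,
    max_ratio: float = 0.9,    # above this, the idea did not really compress
    cost_limit: float = 10.0,  # above this, cost counts as a spike
    min_gain: float = 0.01,    # below this, the spend bought nothing
) -> list[str]:
    """Return the names of the monitoring signals this trace raises."""
    signals = []
    if len(set(t.answers)) > 1:
        signals.append("disagreement")
    if not t.passed_strict:
        signals.append("precision_collapse")
    if t.compression_ratio > max_ratio:
        signals.append("compression_failure")
    if t.cost > cost_limit and t.accuracy_gain < min_gain:
        signals.append("cost_spike")
    return signals


noisy = Trace(["A", "B"], True, 0.95, 12.0, 0.0)
clean = Trace(["A", "A"], True, 0.4, 2.0, 0.1)

noisy_signals = monitoring_signals(noisy)
clean_signals = monitoring_signals(clean)
```

The point of returning the signals rather than raising an error is that they are meant to be logged and learned from, not suppressed.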

The Broader Implication

This is not just an AI design argument.

It is a statement about how knowledge survives.

Systems—biological, institutional, or artificial—that rely on smooth continuity fail when memory breaks. Systems that rely on documented transitions, role separation, and visible reasoning survive turnover.

Edge Thinking is not about being clever.

It is about being durable.

An Open Question

If intelligence improves when ideas are forced to survive constraint…

What other systems—technical, economic, institutional—are failing simply because they were never designed to argue with themselves?

That question remains open.

It should.

